NoQL

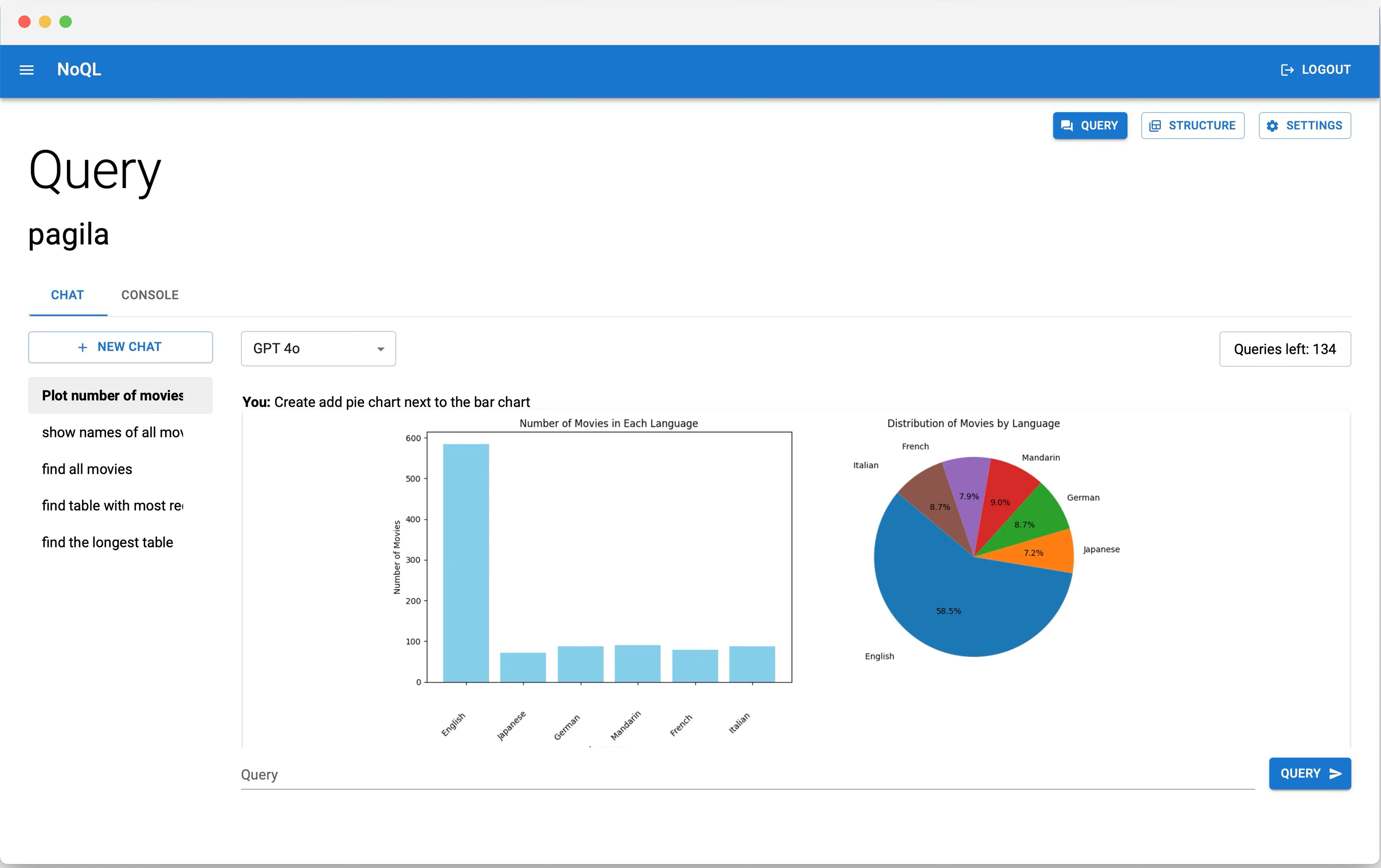

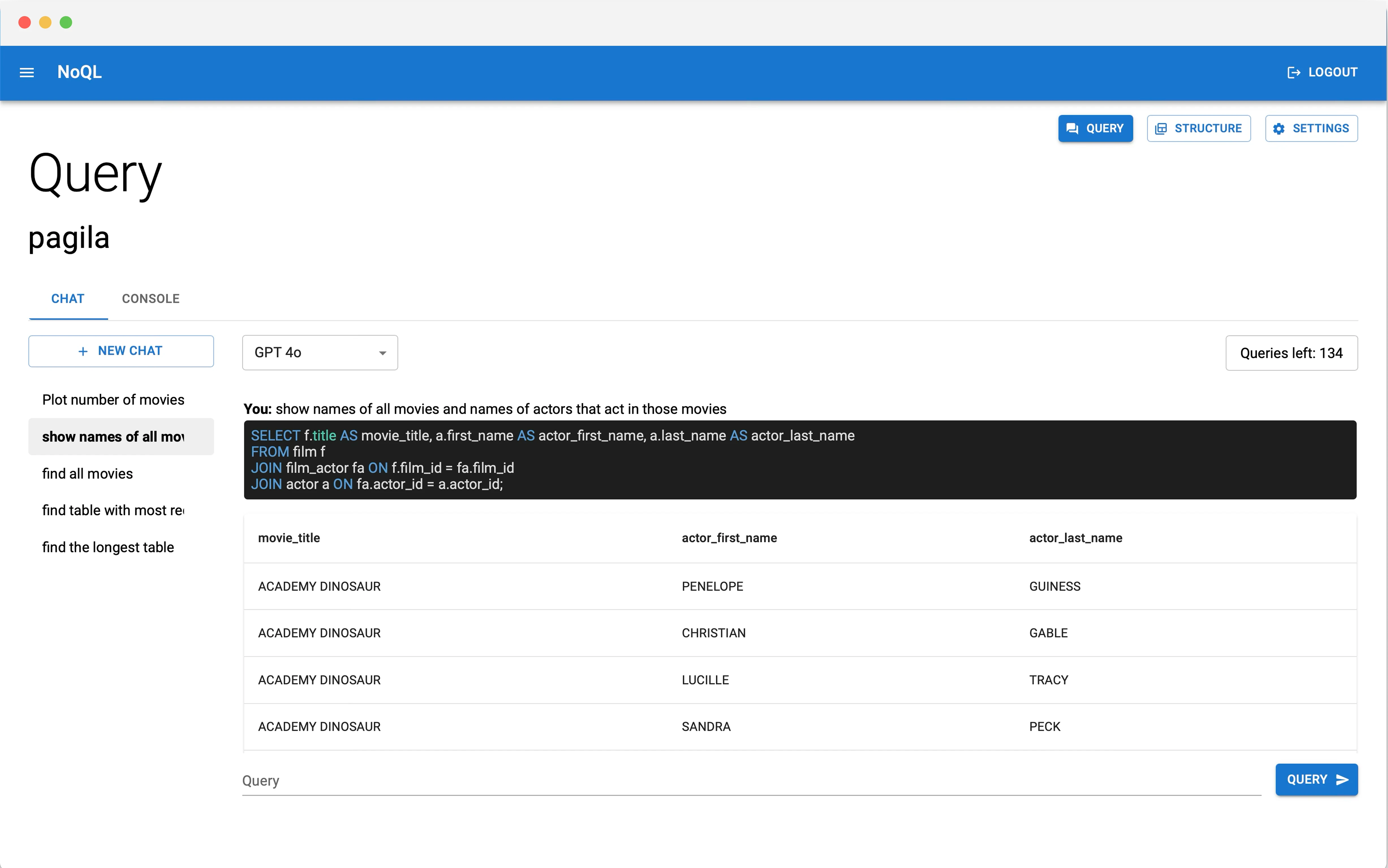

NoQL (No Query Language) is an AI tool for data analysis. It lets users connect to data sources like PostgreSQL, query them using natural language, and visualize results with tables and charts.

The backend is built in Java with the Spring framework and uses PostgreSQL for data persistence. It integrates various LLMs, including OpenAI GPT, Google Gemini, Claude Haiku, and LLaMA. The frontend is a single-page app built with TypeScript, React.js, and Material UI, connected via a REST API.

- Java

- Spring

- Postgres

- LLM

- GPT

- Docker

- Python

- React.js

- TypeScript

Project Goals

I created this project with two goals in mind. First, I wanted to experiment with large language models and explore what they can do. Second, I wanted to learn React.js, and this project was a good opportunity to do so.

Implementation

I started by outlining the core features and selecting the appropriate technologies, then proceeded with backend development.

The backend is written in Java with the Spring framework and follows the MVC architectural pattern. PostgreSQL handles data persistence and runs inside a Docker container. Authentication and authorization use JSON Web Tokens (JWT) through Spring Security. Users pick an LLM from a list, and the selected model converts their natural-language query into SQL (for table visualizations) or Python (for chart visualizations such as bar, pie, or line charts).
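The choice between SQL and Python output can be illustrated with a small sketch. This is not the project's code: the real backend is written in Java, and the names `Visualization`, `target_language`, and `build_prompt`, as well as the prompt wording, are hypothetical. It only shows the idea of picking the target language from the requested visualization and assembling a prompt around the user's question.

```python
from enum import Enum


class Visualization(Enum):
    """Visualization kinds mentioned in the text (hypothetical enum)."""
    TABLE = "table"
    BAR = "bar"
    PIE = "pie"
    LINE = "line"


def target_language(visualization: Visualization) -> str:
    # Tables are produced from SQL results; every chart type goes through Python.
    return "SQL" if visualization is Visualization.TABLE else "Python"


def build_prompt(question: str, schema_description: str,
                 visualization: Visualization) -> str:
    """Assemble an illustrative prompt; the wording is an assumption."""
    language = target_language(visualization)
    if language == "SQL":
        task = "Write one read-only SQL query that answers the question."
    else:
        task = (f"Write Python code that draws a {visualization.value} chart "
                "answering the question.")
    return (
        f"Database schema:\n{schema_description}\n\n"
        f"Question: {question}\n\n"
        f"{task} Return only {language} code."
    )


if __name__ == "__main__":
    print(build_prompt("Total sales per month?",
                       "public.sales(id integer, amount numeric, sold_at date)",
                       Visualization.BAR))
```

Keeping the language decision in one small function means a new chart type only needs a new enum member, while the prompt template stays unchanged.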

The frontend was developed as a single-page application using React.js, with TypeScript chosen over JavaScript for better developer experience and type safety. For the UI, I used the Material UI component library, and Axios was used for handling API communication.

The application was deployed to an AWS EC2 instance, with Docker Compose managing the containerized services. It is currently offline to save on hosting costs.

Challenges

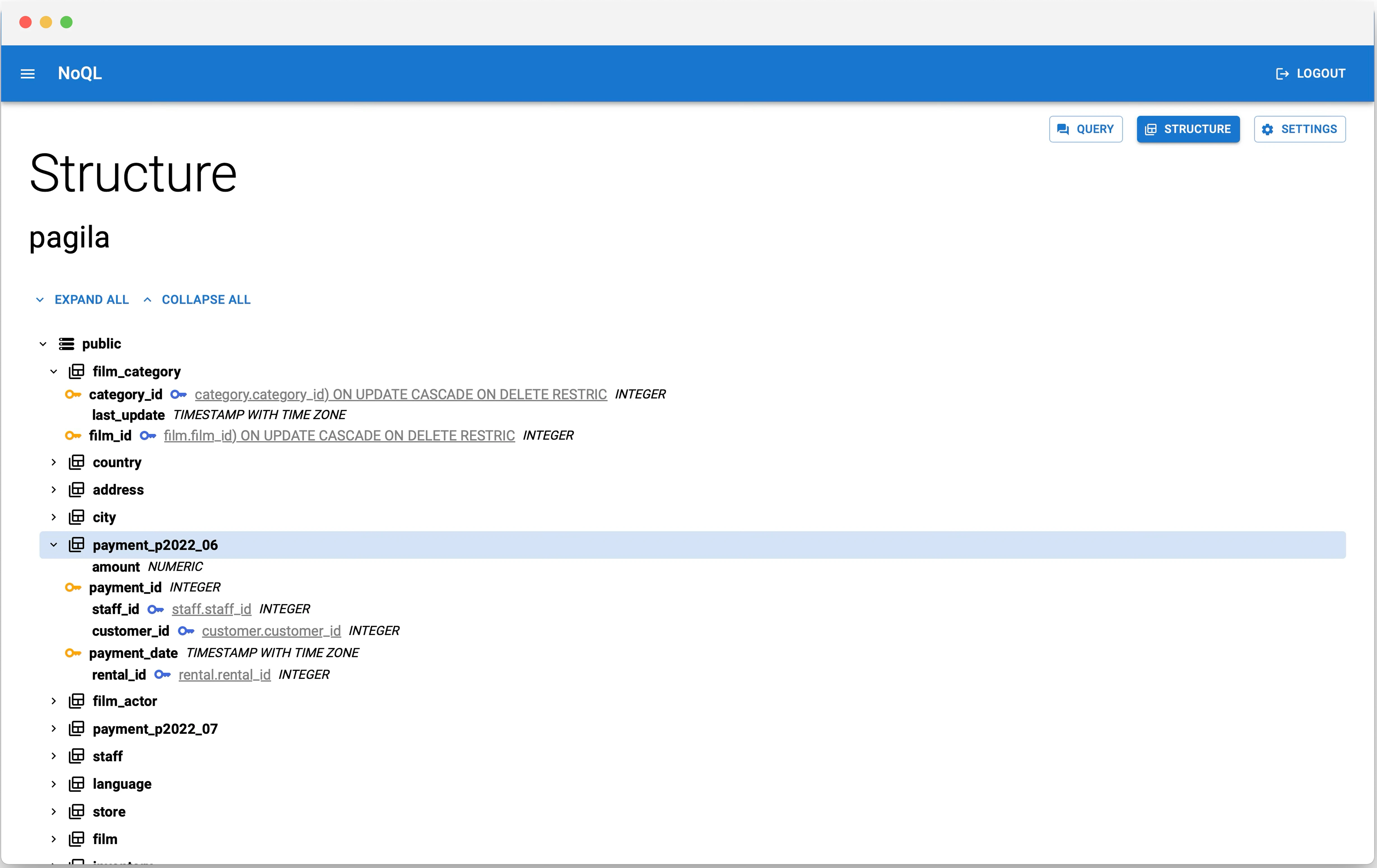

During development, I encountered several challenges. One of the main issues was the rapid pace of AI advancement: LLMs evolve quickly, and I frequently had to adapt my prompts to keep results consistent across model versions. Another challenge was describing the database structure to the LLM effectively. To solve this, I developed custom heuristics that query system tables to extract schema names, table names, column names, data types, references, and more. This lets the application discover the database structure automatically, without the user having to describe it manually.
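As a rough illustration of the schema-extraction idea, the sketch below turns column metadata into a compact text description that could be placed in a prompt. It is an assumption-laden example, not the author's heuristics: the real backend is Java, the query uses the standard `information_schema.columns` view as one possible source, and `describe_schema` together with the shape of its `foreign_keys` argument is hypothetical.

```python
from collections import defaultdict

# One possible metadata query against the standard information_schema view.
# The author's actual queries are not shown in the source.
COLUMNS_QUERY = """
SELECT table_schema, table_name, column_name, data_type
FROM information_schema.columns
WHERE table_schema NOT IN ('pg_catalog', 'information_schema')
ORDER BY table_schema, table_name, ordinal_position
"""


def describe_schema(column_rows, foreign_keys=()):
    """Format metadata rows as one line per table.

    column_rows:  iterable of (schema, table, column, data_type)
    foreign_keys: iterable of (schema, table, column, ref_table, ref_column)
                  (hypothetical shape chosen for this example)
    """
    refs = {(s, t, c): f"{rt}.{rc}" for s, t, c, rt, rc in foreign_keys}
    tables = defaultdict(list)  # keeps tables in first-seen order
    for schema, table, column, data_type in column_rows:
        entry = f"{column} {data_type}"
        target = refs.get((schema, table, column))
        if target:
            entry += f" REFERENCES {target}"
        tables[(schema, table)].append(entry)
    return "\n".join(
        f"{schema}.{table}({', '.join(cols)})"
        for (schema, table), cols in tables.items()
    )


if __name__ == "__main__":
    rows = [
        ("public", "users", "id", "integer"),
        ("public", "orders", "id", "integer"),
        ("public", "orders", "user_id", "integer"),
    ]
    fks = [("public", "orders", "user_id", "users", "id")]
    print(describe_schema(rows, fks))
```

In a real setup the rows would come from running `COLUMNS_QUERY` (and a similar foreign-key query) through a database driver; the function takes plain tuples so the formatting step can be tried without a database connection.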

Conclusion

I developed a functional web application that lets users with no knowledge of SQL or Python analyze data using AI. Through this project, I gained hands-on experience with large language models and deepened my understanding of React.js.